The Next Five Things To Instantly Do About DeepSeek

How has DeepSeek affected global AI development? There are fears that the AI system could be used for international influence operations, spreading disinformation, surveillance, and the development of cyberweapons for the Chinese government. Experts point out that while DeepSeek's cost-efficient model is impressive, it does not negate the crucial role Nvidia's hardware plays in AI development. Here are some examples of how to use our model. 64K extrapolation is not reliable here. Nvidia's stock rebounded by nearly 9% on Tuesday, signaling renewed confidence in the company's future. What are DeepSeek's future plans? Some sources have observed that the official API version of DeepSeek's R1 model uses censorship mechanisms for topics considered politically sensitive by the Chinese government. However, too large an auxiliary loss will impair model performance (Wang et al., 2024a). To achieve a better trade-off between load balance and model performance, we pioneer an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) to ensure load balance. Today, we'll find out if they can play the game as well as us.
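The text names an auxiliary-loss-free load balancing strategy without describing how it works. The following is a minimal sketch of one way such a scheme can operate, assuming a per-expert bias that is added to the routing score for expert selection only and nudged after each step according to observed load. The function names, the skewed score distribution, and the step size are all hypothetical and chosen for illustration; this is not a reproduction of DeepSeek's implementation.

```python
import random

def route_top_k(scores, bias, k):
    """Pick the k experts with the highest (score + bias).

    The bias only influences which experts are selected; it is not a loss term.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]

def update_bias(bias, load, step_size):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step_size)
        elif n < mean_load:
            new_bias.append(b + step_size)
        else:
            new_bias.append(b)
    return new_bias

def simulate(num_experts=4, k=1, tokens_per_step=512, steps=200, step_size=0.01, seed=0):
    """Route random tokens for several steps and record the per-expert load."""
    rng = random.Random(seed)
    # Hypothetical skew: expert 0 systematically receives higher scores.
    skew = [0.3] + [0.0] * (num_experts - 1)
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        load = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [rng.random() + skew[i] for i in range(num_experts)]
            for expert in route_top_k(scores, bias, k):
                load[expert] += 1
        history.append(load)
        bias = update_bias(bias, load, step_size)
    return history, bias

if __name__ == "__main__":
    history, bias = simulate()
    print("first step load:", history[0])
    print("last step load: ", history[-1])
    print("final bias:     ", [round(b, 2) for b in bias])
```

In this toy setup the favored expert starts out with far more than its share of tokens, and the bias adjustment pulls the load back toward an even split without adding any term to the training loss.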

In addition, for DualPipe, neither the bubbles nor the activation memory will increase as the number of micro-batches grows. In fact, the emergence of such efficient models could even broaden the market and ultimately increase demand for Nvidia's advanced processors. I like to stay on the ‘bleeding edge’ of AI, but this one came faster than even I was ready for. Right now no one really knows what DeepSeek’s long-term intentions are. The unveiling of DeepSeek’s V3 AI model, developed at a fraction of the cost of its U.S. At a supposed cost of just $6 million to train, DeepSeek’s new R1 model, released last week, was able to match the performance on several math and reasoning metrics of OpenAI’s o1 model, the result of tens of billions of dollars in investment by OpenAI and its patron Microsoft. MLA ensures efficient inference by significantly compressing the Key-Value (KV) cache into a latent vector, while DeepSeekMoE enables training strong models at an economical cost through sparse computation. 4096 for example, in our preliminary test, the limited accumulation precision in Tensor Cores results in a maximum relative error of nearly 2%. Despite these problems, limited accumulation precision is still the default option in a few FP8 frameworks (NVIDIA, 2024b), severely constraining the training accuracy.
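To make the accumulation-precision point concrete, here is a toy simulation in plain Python. It rounds the running sum of a dot product to a limited number of significant bits after every addition and compares the result with a full-precision sum. The 13-bit setting, the uniform random inputs, and the function names are assumptions for illustration only. The sketch does not model how Tensor Cores actually accumulate, so its error figures should not be compared with the 2% quoted above.

```python
import math
import random

def round_to_bits(x, mantissa_bits):
    """Round x to the given number of significant binary digits."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)  # x = m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** mantissa_bits
    return math.ldexp(round(m * scale) / scale, e)

def dot_limited(a, b, mantissa_bits):
    """Dot product whose running sum is rounded after every addition."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = round_to_bits(acc + x * y, mantissa_bits)
    return acc

def relative_error(k, mantissa_bits=13, seed=0):
    """Relative error of the limited-precision dot product for vectors of length k."""
    rng = random.Random(seed)
    a = [rng.random() for _ in range(k)]
    b = [rng.random() for _ in range(k)]
    exact = math.fsum(x * y for x, y in zip(a, b))
    return abs(dot_limited(a, b, mantissa_bits) - exact) / exact

if __name__ == "__main__":
    for k in (64, 512, 4096):
        print(k, relative_error(k))
```

Running the script for increasing vector lengths shows how the error behaves as more terms pass through a narrow accumulator. Raising `mantissa_bits` shrinks the error.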

All bells and whistles aside, the deliverable that matters is how good the models are relative to FLOPs spent. DeepSeek-V2 comprises 236B total parameters, of which 21B are activated for each token, and supports a context length of 128K tokens. The paper introduces DeepSeekMath 7B, a large language model that has been pre-trained on a massive amount of math-related data from Common Crawl, totaling 120 billion tokens. At every attention layer, information can move forward by W tokens. By improving code understanding, generation, and editing capabilities, the researchers have pushed the boundaries of what large language models can achieve in the realm of programming and mathematical reasoning. Abstract: We present DeepSeek-V2, a strong Mixture-of-Experts (MoE) language model characterized by economical training and efficient inference. First, they fine-tuned the DeepSeekMath-Base 7B model on a small dataset of formal math problems and their Lean 4 definitions to obtain the initial version of DeepSeek-Prover, their LLM for proving theorems. Their outputs are based on a huge dataset of texts harvested from internet databases, some of which include speech that is disparaging to the CCP.
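The remark that information can move forward by W tokens at every attention layer describes sliding-window attention. Below is a small sketch of how that reach compounds across layers. It assumes a causal window in which position i may attend to positions i-W through i. That convention and the function names are assumptions, since the text does not specify them.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j."""
    return [[0 <= i - j <= window for j in range(seq_len)] for i in range(seq_len)]

def reachable_after(mask, layers):
    """reach[i][j] is True when token j can influence position i after `layers` layers."""
    n = len(mask)
    # Before any attention layer, each position only holds its own token.
    reach = [[i == j for j in range(n)] for i in range(n)]
    for _ in range(layers):
        reach = [
            [any(mask[i][m] and reach[m][j] for m in range(n)) for j in range(n)]
            for i in range(n)
        ]
    return reach

def max_distance(reach):
    """Largest gap i - j over all pairs where token j can influence position i."""
    return max(i - j for i, row in enumerate(reach) for j, ok in enumerate(row) if ok)

if __name__ == "__main__":
    mask = sliding_window_mask(seq_len=20, window=3)
    for layers in (1, 2, 4):
        print(layers, max_distance(reachable_after(mask, layers)))
```

Under this convention, stacking k layers lets information travel up to k × W positions, capped by the sequence length. This is why a modest window can still cover a long context in a deep model.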

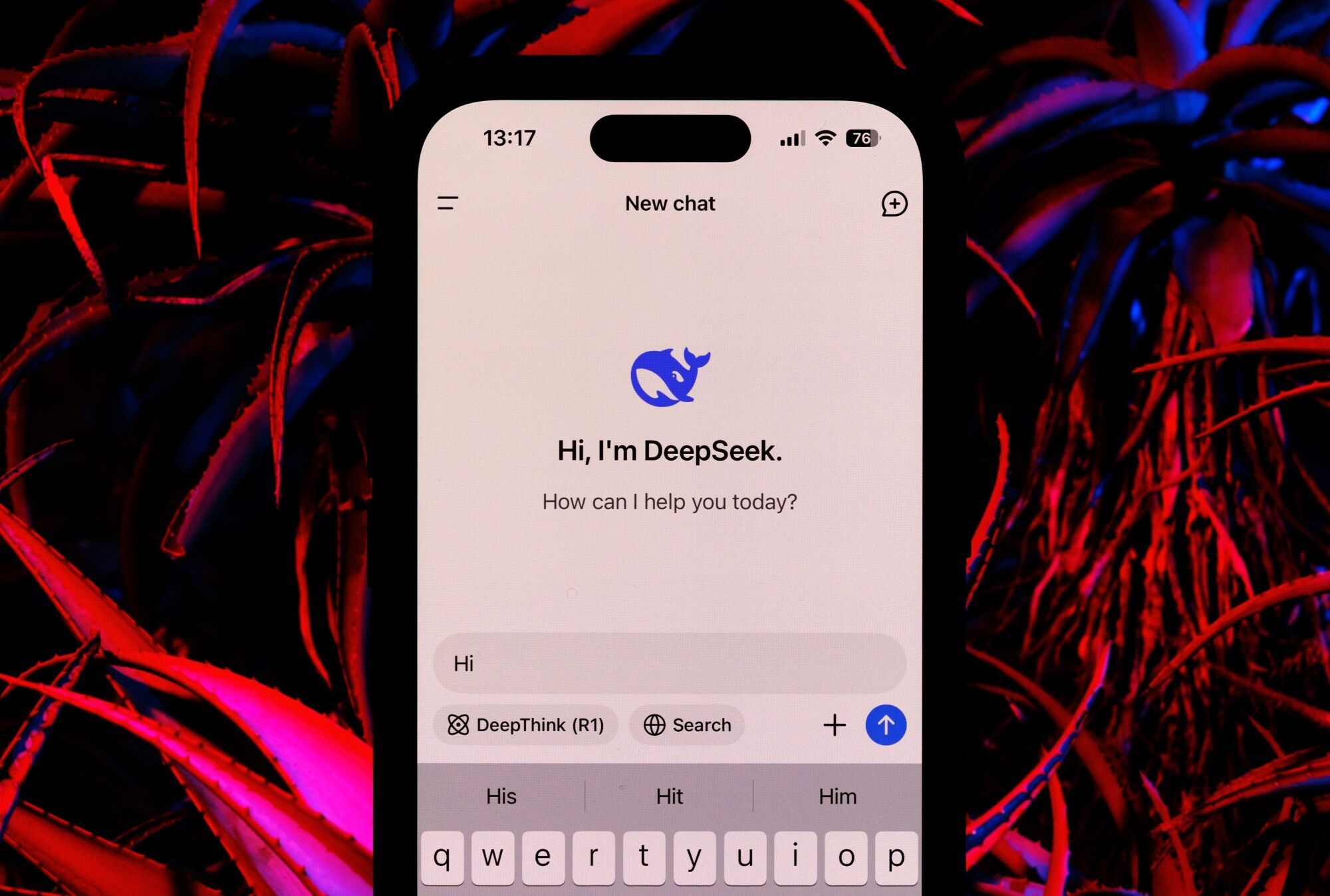

I assume that most people who still use the latter are newbies following tutorials that have not been updated yet, or possibly even ChatGPT outputting responses with create-react-app instead of Vite. A new Chinese AI model, created by the Hangzhou-based startup DeepSeek, has stunned the American AI industry by outperforming some of OpenAI’s leading models, displacing ChatGPT at the top of the iOS app store, and usurping Meta as the leading purveyor of so-called open-source AI tools. The current "best" open-weights models are the Llama 3 series of models, and Meta seems to have gone all-in to train the best vanilla dense transformer. Best results are shown in bold. Evaluation results show that, even with only 21B activated parameters, DeepSeek-V2 and its chat versions still achieve top-tier performance among open-source models. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. It’s clear that the crucial "inference" stage of AI deployment still heavily relies on Nvidia's chips, reinforcing their continued importance in the AI ecosystem. Sam: It’s interesting that Baidu seems to be the Google of China in many ways.
