DeepSeek Once, DeepSeek Twice: Three Reasons Why You Should Not De…

DeepSeek's flagship offerings include its LLM, which is available in various sizes, and DeepSeek Coder, a specialized model for programming tasks. In his keynote, Wu highlighted that, whereas large models last year were limited to assisting with simple coding, they have since advanced to understanding more complex requirements and handling intricate programming tasks. An object count of 2 for Go versus 7 for Java for such a simple example makes comparing coverage objects across languages impossible. I think one of the big questions is whether, with the export controls that constrain China's access to the chips needed to power these AI systems, that gap is going to get bigger over time or not. With far more diverse cases, which could more likely lead to dangerous executions (think rm -rf), and more models, we needed to address both shortcomings. Introducing new real-world cases for the write-tests eval task also introduced the possibility of failing test cases, which require additional care and assessments for quality-based scoring. With the new cases in place, having code generated by a model plus executing and scoring it took on average 12 seconds per model per case. Another example, generated by Openchat, presents a test case with two for loops with an excessive number of iterations.

One test generated by StarCoder tries to read a value from STDIN, blocking the entire evaluation run. Upcoming versions of DevQualityEval will introduce more official runtimes (e.g. Kubernetes) to make it easier to run evaluations on your own infrastructure. That will also make it possible to determine the quality of single tests (e.g. does a test cover something new or does it cover the same code as the previous test?). We started building DevQualityEval with initial support for OpenRouter because it offers a huge, ever-growing selection of models to query via one single API. A single panicking test can therefore lead to a very bad score. Blocking an automatically running test suite for manual input should be clearly scored as bad code. This is bad for an evaluation, since all tests that come after the panicking test are not run, and even the tests before it do not receive coverage. Assume the model is supposed to write tests for source code containing a path that leads to a NullPointerException.

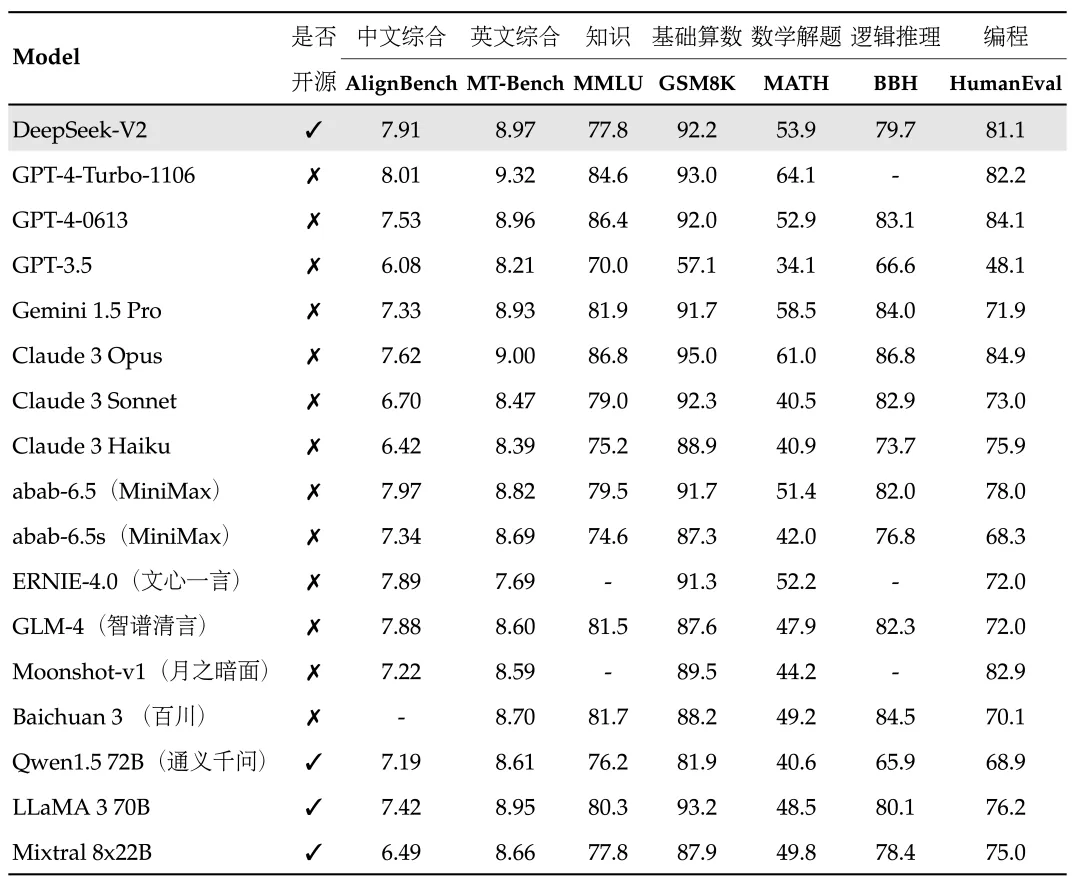

To partially address this, we make sure that all experimental results are reproducible by storing all files that are executed. The test cases took roughly 15 minutes to execute and produced 44 GB of log files. Provide a passing test by using e.g. Assertions.assertThrows to catch the exception. With these exceptions noted in the tag, we can now craft an attack to bypass the guardrails to achieve our goal (using payload splitting). Such exceptions require the first option (catching the exception and passing), since the exception is part of the API's behavior. From a developer's point of view the latter option (not catching the exception and failing) is preferable, since a NullPointerException is usually not wanted and the test therefore points to a bug. As software developers we would never commit a failing test into production. That is true, but looking at the results of hundreds of models, we can state that models that generate test cases that cover implementations vastly outpace this loophole. C-Eval: A multi-level multi-discipline Chinese evaluation suite for foundation models. Since Go panics are fatal, they are not caught by testing tools, i.e. the test suite execution is abruptly stopped and there is no coverage. Otherwise a test suite that contains just one failing test would receive zero coverage points as well as zero points for being executed.
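
The Java advice above (catch the expected exception so the test passes) has a rough Go counterpart: recover the panic inside a helper so that a single bad path does not stop everything after it. The sketch below is only an illustration under that assumption; `node`, `valueOf` and `panics` are made-up names, and this is not how DevQualityEval itself handles panics.

```go
package main

import "fmt"

type node struct{ value int }

// valueOf is a hypothetical function under test. It dereferences n without
// a nil check, so a nil argument triggers a runtime panic.
func valueOf(n *node) int {
	return n.value
}

// panics runs fn and reports whether it panicked. The deferred recover
// keeps the caller running, much like assertThrows does for exceptions.
func panics(fn func()) (caught bool) {
	defer func() {
		if r := recover(); r != nil {
			caught = true
		}
	}()
	fn()
	return false
}

func main() {
	fmt.Println(panics(func() { valueOf(nil) }))             // true
	fmt.Println(panics(func() { valueOf(&node{value: 1}) })) // false
}
```

Whether recovering is the right choice depends on the case discussed above: if the panic is part of the documented behavior, asserting on it is reasonable; if it is unintended, letting the test fail points to the bug.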

By incorporating the Fugaku-LLM into the SambaNova CoE, the impressive capabilities of this LLM are being made available to a broader audience. If more test cases are needed, we can always ask the model to write more based on the existing cases. Giving LLMs more room to be "creative" when it comes to writing tests comes with several pitfalls when executing tests. On the other hand, one could argue that such a change would benefit models that write some code that compiles but does not actually cover the implementation with tests. Iterating over all permutations of a data structure tests a lot of conditions of a piece of code, but does not represent a unit test. Some LLM responses were wasting a lot of time, either by using blocking calls that would entirely halt the benchmark or by generating excessive loops that would take almost a quarter of an hour to execute. We can now benchmark any Ollama model with DevQualityEval by either using an existing Ollama server (on the default port) or by starting one on the fly automatically.
