5 Most Well-Guarded Secrets About DeepSeek

DeepSeek was founded less than two years ago by the Chinese hedge fund High-Flyer as a research lab dedicated to pursuing Artificial General Intelligence, or AGI. Together AI CEO Vipul Prakash noted that DeepSeek-R1 typically has longer-lived requests that can last two to three minutes. There are several model variants available, some of which are distilled from DeepSeek-R1 and V3. We have no reason to believe the web-hosted versions would respond differently. All the hyperscalers, including Microsoft, AWS and Google, have AI platforms. The market for AI infrastructure platforms is fiercely competitive. DeepSeek’s launch of its R1 model in late January 2025 triggered a sharp decline in market valuations across the AI value chain, from model developers to infrastructure providers. Investors saw R1 as a threat to the sky-high growth projections that had justified outsized valuations. Reducing hallucinations: The reasoning process helps to verify the outputs of models, thus reducing hallucinations, which is important for applications where accuracy is crucial.

At least according to Together AI, the rise of DeepSeek and open-source reasoning has had the exact opposite effect: Instead of reducing the need for infrastructure, it is increasing it. Whether or not that package of export controls will be effective remains to be seen, but there is a broader point that both the current and incoming presidential administrations need to understand: speedy, simple, and frequently updated export controls are far more likely to be effective than even an exquisitely complex, well-defined policy that comes too late. Tremendous user demand for DeepSeek-R1 is further driving the need for more infrastructure. Prakash explained that Together AI has grown its infrastructure in part to help support increased demand for DeepSeek-R1-related workloads. He added that agentic workflows, where a single user request results in hundreds of API calls to complete a task, are placing more compute demand on Together AI’s infrastructure. To meet that demand, Together AI has rolled out a service it calls "reasoning clusters" that provisions dedicated capacity, ranging from 128 to 2,000 chips, to run models at the best possible performance.
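
To see why long-lived requests translate into more infrastructure, one rough way to reason about it is Little's law: the average number of requests in flight equals the arrival rate multiplied by the mean request duration. The sketch below is only an illustration under stated assumptions. The arrival rate and the 5-second "short" request are hypothetical values that do not come from Together AI; only the two-to-three-minute durations come from the article.

```python
def average_in_flight(arrival_rate_per_s: float, mean_duration_s: float) -> float:
    """Little's law: average concurrency = arrival rate x mean time in system."""
    return arrival_rate_per_s * mean_duration_s


# Hypothetical load, chosen only for illustration (not a Together AI figure).
ARRIVALS_PER_SECOND = 10.0

scenarios = [
    ("short request (hypothetical 5 s)", 5.0),
    ("reasoning request (2 min, per the article)", 120.0),
    ("reasoning request (3 min, per the article)", 180.0),
]

for label, duration_s in scenarios:
    concurrent = average_in_flight(ARRIVALS_PER_SECOND, duration_s)
    print(f"{label}: about {concurrent:.0f} requests in flight on average")
```

At the same arrival rate, a request that runs for minutes rather than seconds keeps proportionally more requests in flight at once, and agentic fan-out raises the arrival rate on top of that.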

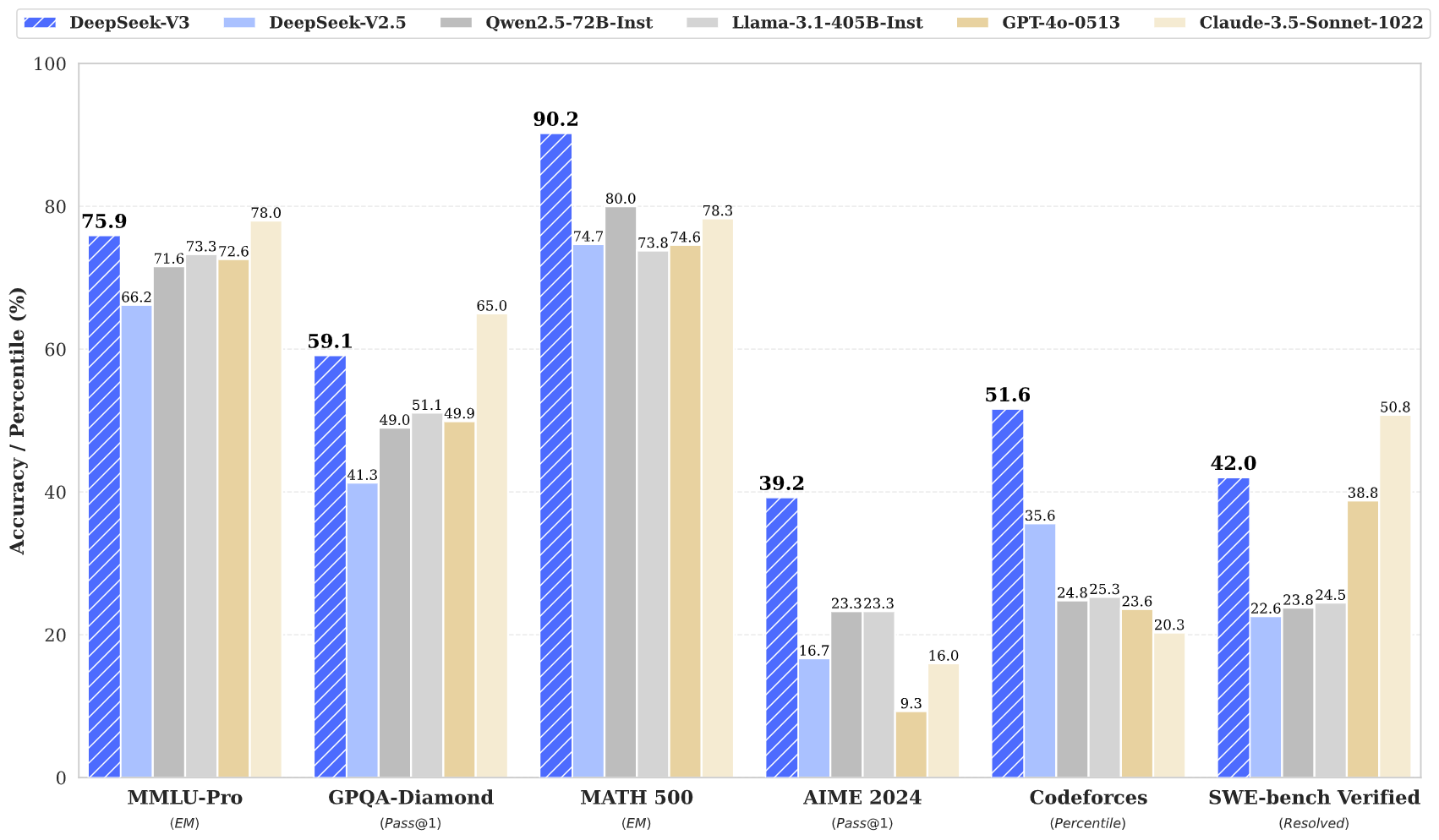

"It’s a fairly expensive model to run inference on," Prakash said. The Chinese model is also cheaper for users. Together AI is also seeing increased infrastructure demand as its users embrace agentic AI. Together AI faces competition from both established cloud providers and AI infrastructure startups. Security researchers have found that DeepSeek sends data to a cloud platform affiliated with ByteDance. Together AI has a full-stack offering, including GPU infrastructure with software platform layers on top. DeepSeek-R1 was massively disruptive when it first debuted, for a number of reasons - one of which was the implication that a leading-edge open-source reasoning model could be built and deployed with less infrastructure than a proprietary model. "For instance, we serve the DeepSeek-R1 model at 85 tokens per second and Azure serves it at 7 tokens per second," said Prakash. DeepSeek-R1 is a worthy OpenAI competitor, particularly in reasoning-focused AI. At a supposed cost of just $6 million to train, DeepSeek’s new R1 model, released last week, was able to match the performance of OpenAI’s o1 model on several math and reasoning metrics - even though o1 is the result of tens of billions of dollars in investment by OpenAI and its patron Microsoft. DeepSeek-V3 was trained on 2,788,000 H800 GPU hours at an estimated cost of $5,576,000.
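
The figures quoted above can be checked with simple arithmetic. The sketch below derives the per-GPU-hour rate implied by the DeepSeek-V3 training estimate and compares how long the two quoted serving speeds would take to generate a response. The 1,000-token response length is a hypothetical value chosen for illustration, and the timing ignores queueing and time to first token.

```python
# Figures quoted in the article.
H800_GPU_HOURS = 2_788_000
ESTIMATED_COST_USD = 5_576_000
TOGETHER_TOKENS_PER_S = 85
AZURE_TOKENS_PER_S = 7

# Hypothetical response length, chosen only for illustration.
EXAMPLE_RESPONSE_TOKENS = 1_000


def implied_rate_per_gpu_hour(total_cost_usd: float, gpu_hours: float) -> float:
    """Cost per GPU hour implied by a total cost and a GPU-hour count."""
    return total_cost_usd / gpu_hours


def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to emit num_tokens at a steady decoding speed."""
    return num_tokens / tokens_per_second


rate = implied_rate_per_gpu_hour(ESTIMATED_COST_USD, H800_GPU_HOURS)
print(f"Implied rate: ${rate:.2f} per H800 GPU hour")

for provider, speed in [("Together AI", TOGETHER_TOKENS_PER_S), ("Azure", AZURE_TOKENS_PER_S)]:
    seconds = generation_time_s(EXAMPLE_RESPONSE_TOKENS, speed)
    print(f"{provider}: {seconds:.1f} s for {EXAMPLE_RESPONSE_TOKENS} tokens")
```

The training estimate works out to exactly $2 per H800 GPU hour, which suggests the figure is a rental-price assumption multiplied by GPU hours rather than a measured total spend.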

Mistral claims Codestral already outperforms earlier models designed for coding tasks, including CodeLlama 70B and DeepSeek Coder 33B, and is being used by several industry partners, including JetBrains, Sourcegraph and LlamaIndex. Together AI says its AI deployment platform has more than 450,000 registered developers and that the business has grown 6X overall year-over-year. Prakash said Nvidia Blackwell chips cost around 25% more than the previous generation, but provide 2X the performance. Some people claim that DeepSeek is sandbagging its inference cost (i.e. losing money on each inference call in order to humiliate Western AI labs). The company’s customers include enterprises as well as AI startups such as Krea AI, Captions and Pika Labs. This enables customers to easily build with open-source models or develop their own models on the Together AI platform. Improving non-reasoning models: Customers are distilling and improving the quality of non-reasoning models. Damp %: A GPTQ parameter that affects how samples are processed for quantisation. Or this: using ControlNet, you can make interesting text appear inside images that are generated via diffusion models, a particular kind of magic! "We are now serving models across all modalities: language and reasoning and images and audio and video," Vipul Prakash, CEO of Together AI, told VentureBeat.
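
Prakash's Blackwell comparison (around 25% more cost for 2X the performance) implies a price-performance gain that is easy to work out. The sketch below is a minimal calculation under the assumption that "performance" scales the amount of work done per chip linearly; the function name is illustrative and not part of any vendor tooling.

```python
def relative_perf_per_dollar(price_increase: float, perf_multiplier: float) -> float:
    """Performance per dollar of the new chip relative to the old one.

    price_increase is a fraction (0.25 means 25% more expensive).
    perf_multiplier is new performance divided by old performance.
    """
    return perf_multiplier / (1.0 + price_increase)


# Figures quoted by Prakash in the article.
BLACKWELL_PRICE_INCREASE = 0.25
BLACKWELL_PERF_MULTIPLIER = 2.0

gain = relative_perf_per_dollar(BLACKWELL_PRICE_INCREASE, BLACKWELL_PERF_MULTIPLIER)
cost_per_unit_work = 1.0 / gain

print(f"Performance per dollar: {gain:.2f}x the previous generation")
print(f"Cost per unit of work: {cost_per_unit_work:.3f}x the previous generation")
```

Under these assumptions, each dollar spent on Blackwell buys about 1.6 times the work, so the same workload costs roughly 62.5% of what it did on the previous generation.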
