Getting the Most Effective DeepSeek

1. What's DeepSeek AI? Unlike other commercial research labs, outside of possibly Meta, DeepSeek has primarily been open-sourcing its models. PCs, or PCs built to a certain spec to support AI models, will be able to run AI models distilled from DeepSeek R1 locally.

Equally important, the structure specification needs to support a diverse range of structures relevant to current and future applications. First, efficiency should be the top priority of LLM inference engines, and structured generation support should not slow down the LLM service. All existing open-source structured generation solutions introduce large CPU overhead, resulting in a significant slowdown in LLM inference. Figure 1 shows that XGrammar outperforms existing structured generation solutions by up to 3.5x on JSON schema workloads and by up to 10x on tasks guided by a context-free grammar (CFG). The figure below shows an example of a CFG for nested recursive string arrays. Figure 2 shows that our solution outperforms existing LLM engines by up to 14x in JSON-schema generation and by up to 80x in CFG-guided generation. Thankfully, the AI tool not only identified the issue but also provided a clear explanation and solution. On top of the above two goals, the solution should be portable to enable structured generation applications everywhere.
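To make "a CFG for nested recursive string arrays" concrete, here is a minimal sketch of a recognizer for such a grammar. It assumes a simplified grammar with no whitespace and no escape sequences, and it is a plain recursive-descent parser written for illustration, not XGrammar's API or grammar engine; the names `_Parser` and `matches_nested_string_array` are hypothetical.

```python
# Illustrative grammar (EBNF-style, simplified: no whitespace, no escapes):
#   array  ::= "[" ( value ( "," value )* )? "]"
#   value  ::= string | array
#   string ::= '"' [^"]* '"'


class _Parser:
    """Hypothetical recursive-descent recognizer for the grammar above."""

    def __init__(self, text: str) -> None:
        self.text = text
        self.pos = 0

    def _peek(self) -> str:
        # Empty string signals end of input.
        return self.text[self.pos] if self.pos < len(self.text) else ''

    def _expect(self, ch: str) -> None:
        if self._peek() != ch:
            raise ValueError(f'expected {ch!r} at position {self.pos}')
        self.pos += 1

    def array(self) -> None:
        self._expect('[')
        if self._peek() != ']':
            self.value()
            while self._peek() == ',':
                self.pos += 1
                self.value()
        self._expect(']')

    def value(self) -> None:
        if self._peek() == '[':
            self.array()  # recursion: arrays may nest arbitrarily deep
        else:
            self.string()

    def string(self) -> None:
        self._expect('"')
        while self._peek() not in ('"', ''):
            self.pos += 1
        self._expect('"')


def matches_nested_string_array(text: str) -> bool:
    """Return True if the whole text is derivable from the `array` rule."""
    parser = _Parser(text)
    try:
        parser.array()
    except ValueError:
        return False
    return parser.pos == len(text)


if __name__ == '__main__':
    print(matches_nested_string_array('["a",["b",[]]]'))  # True
    print(matches_nested_string_array('["a",]'))          # False
```

The `value -> array` recursion is what a regular expression cannot express, which is why arbitrarily nested structures call for a CFG rather than a regex.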

In this post, we introduce XGrammar, an open-source library for efficient, flexible, and portable structured generation. One commonly used example of structured generation is the JSON format. In many applications, we may further constrain the structure using a JSON schema, which specifies the type of every field in a JSON object and has been adopted as a supported output format for GPT-4 in the OpenAI API. Constrained decoding is a common technique for enforcing the output format of an LLM. Structured generation allows us to specify an output format and enforce this format during LLM inference. Test inference speed and response quality with sample prompts. Modern LLM inference on the latest GPUs can generate tens of thousands of tokens per second in large batch scenarios. " are allowed within the second decoding step. We are witnessing an exciting era for large language models (LLMs). CMath: Can Your Language Model Pass Chinese Elementary School Math Test? Once again, let's contrast this with the Chinese AI startup Zhipu.
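Constrained decoding can be sketched as token masking: at each step, every token that would break the required structure gets a logit of negative infinity before the next token is chosen. The sketch below is a minimal illustration, assuming a hypothetical nine-token vocabulary, a fake model (`fake_logits`), and a finite set of accepted outputs standing in for a real JSON schema or grammar. It is not XGrammar's API or implementation.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical toy vocabulary; real tokenizers have tens of thousands of entries.
VOCAB: List[str] = ['{', '"answer"', ':', ' ', 'true', 'false', '}', 'hello', '<eos>']
EOS = '<eos>'

# Assumption: a finite set of complete outputs stands in for a JSON schema or grammar.
ALLOWED_OUTPUTS: List[str] = ['{"answer": true}', '{"answer": false}']


def allowed_mask(prefix: str) -> List[bool]:
    """For each vocabulary token, report whether appending it keeps the output valid."""
    mask = []
    for tok in VOCAB:
        if tok == EOS:
            # End-of-sequence is legal only once the output is complete.
            mask.append(prefix in ALLOWED_OUTPUTS)
        else:
            candidate = prefix + tok
            mask.append(any(out.startswith(candidate) for out in ALLOWED_OUTPUTS))
    return mask


def constrained_greedy_decode(
    logits_fn: Callable[[str], Sequence[float]], max_steps: int = 20
) -> str:
    """Greedy decoding in which disallowed tokens get -inf before the argmax."""
    text = ''
    for _ in range(max_steps):
        mask = allowed_mask(text)
        if not any(mask):
            raise ValueError(f'no valid continuation after {text!r}')
        masked = [score if ok else -math.inf
                  for score, ok in zip(logits_fn(text), mask)]
        best = max(range(len(VOCAB)), key=lambda i: masked[i])
        if VOCAB[best] == EOS:
            break
        text += VOCAB[best]
    return text


def fake_logits(text: str) -> List[float]:
    """Hypothetical model that ignores its input and prefers 'hello', then 'false'."""
    scores = {'hello': 5.0, 'false': 3.0, EOS: 1.0}
    return [scores.get(tok, 0.0) for tok in VOCAB]


if __name__ == '__main__':
    print(constrained_greedy_decode(fake_logits))  # {"answer": false}
```

Without the mask, this fake model would emit `hello` at every step; with it, greedy decoding is forced into `{"answer": false}`, the allowed output the model scores highest. A real engine would replace the linear scan in `allowed_mask` with a compiled grammar matcher, and computing that mask quickly is the part that tends to cost CPU time.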

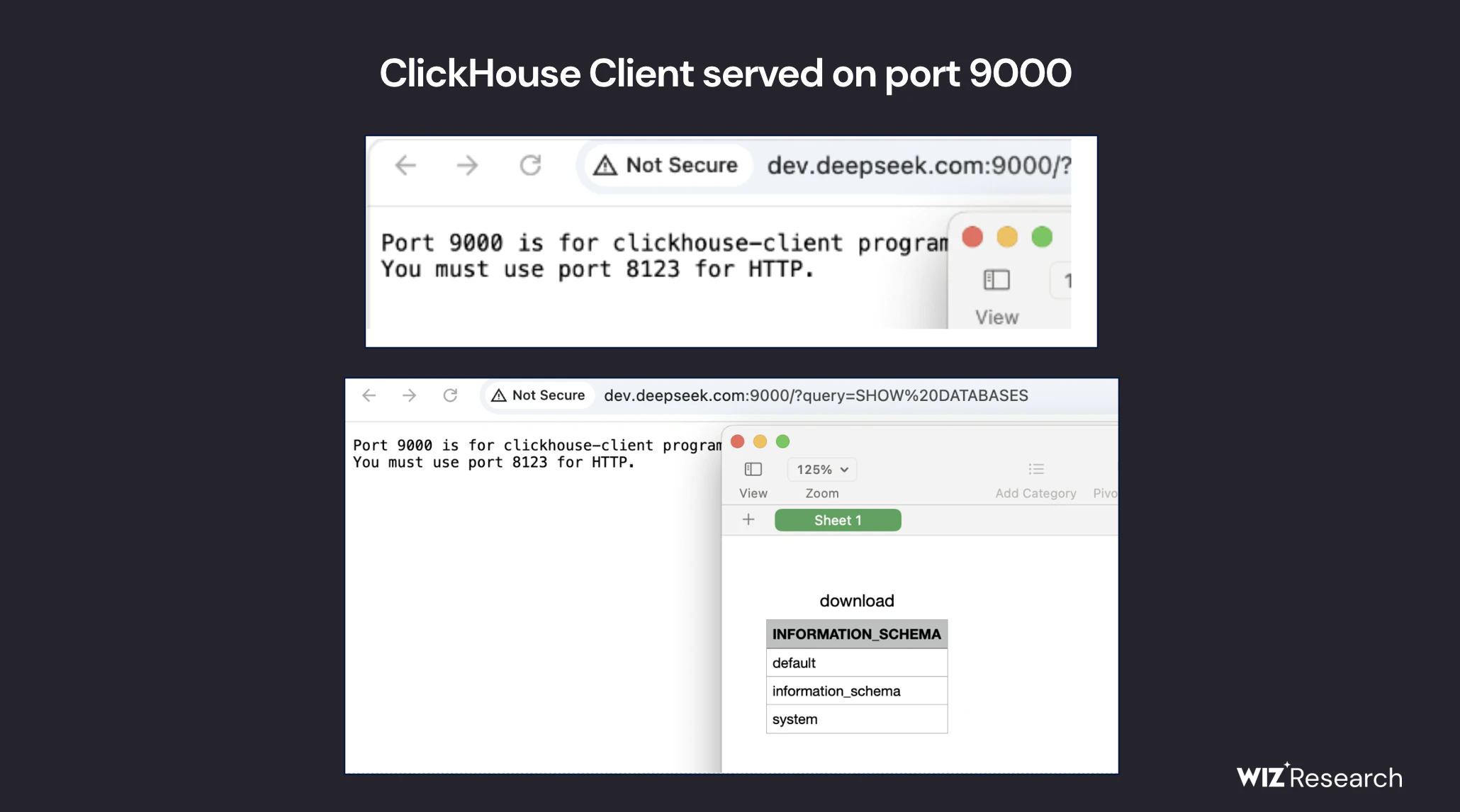

Open-source models like DeepSeek rely on partnerships to secure infrastructure while offering research expertise and technical advances in return. This example walks you through how to deploy and train DeepSeek models with dstack. DeepSeek R1 excels at step-by-step reasoning through tasks, making it well suited to complex queries that require detailed analysis. It's fascinating how they upgraded the Mixture-of-Experts architecture and attention mechanisms to new versions, making LLMs more versatile and cost-efficient, and better able to address computational challenges, handle long contexts, and run quickly. The model is trained on large text corpora, making it highly effective at capturing semantic similarities and text relationships. Additionally, we benchmark end-to-end structured generation engines powered by XGrammar with the Llama-3 model on NVIDIA H100 GPUs. Security researchers have found several vulnerabilities in DeepSeek's safety framework that allow malicious actors to manipulate the model through carefully crafted jailbreaking techniques. However, concerns have also been raised about data privacy, since user data is stored on servers in China, and about the model's strict censorship of sensitive topics.
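To illustrate the Mixture-of-Experts idea mentioned above, here is a minimal sketch of generic top-k expert routing for a single token. It assumes a linear gate and toy experts (`make_scaling_expert` and the gate weights are hypothetical). It is a textbook formulation, not DeepSeek's actual MoE architecture, which this post does not describe.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_scaling_expert(scale: float) -> Expert:
    """Hypothetical toy expert: multiplies its input by a constant."""
    return lambda v: [scale * a for a in v]


def moe_forward(x: Vector, gate_weights: Sequence[Vector],
                experts: Sequence[Expert], k: int = 2) -> Vector:
    """Top-k Mixture-of-Experts layer for a single token (generic sketch)."""
    # Linear router: one score per expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Select the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalise the gate probabilities over the selected experts only.
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)
        out = [o + p * yj for o, yj in zip(out, y)]
    return out


if __name__ == '__main__':
    experts = [make_scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
    gate = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 2.0], gate, experts, k=1))  # [3.0, 6.0]
```

Because only `k` experts run per token, per-token compute stays roughly constant as more experts are added, which is the usual cost-efficiency argument for MoE models.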

Hodan Omaar is a senior policy manager at the Center for Data Innovation, specializing in AI policy. AI regulation does not impose unnecessary burdens on innovation. If the United States wants to stay ahead, it should recognize the nature of this competition, rethink policies that disadvantage its own companies, and ensure it does not hamstring its AI companies' ability to develop. China's AI companies are innovating at the frontier, supported by a government that ensures they succeed and a regulatory environment that supports their scaling. While U.S. companies could similarly benefit from strategic partnerships, they are impeded by an overly stringent domestic antitrust environment. We believe the pipeline will benefit the industry by creating better models. I will consider adding 32g as well if there is interest, and once I have done perplexity and evaluation comparisons, but at present 32g models are still not fully tested with AutoAWQ and vLLM.